Q: What is EC2?

A: EC2 (Elastic Compute Cloud) is an Infrastructure as a Service (IaaS) cloud computing platform provided by Amazon Web Services that allows users to instantiate various types of virtual machines.

Q: What is an instance?

A: An EC2 instance is a Virtual Machine running on Amazon’s EC2 Cloud.

Q: What is an AMI?

A: An AMI (Amazon Machine Image) is a preconfigured bootable machine image from which one can instantiate an EC2 instance (an EC2 virtual machine).

Q: What is an AKI?

A: An AKI (Amazon Kernel Image) is a preconfigured bootable kernel mini-image, prebuilt and provided by Amazon, that is used to boot instances. Typically one uses an AKI that contains pv-grub, so that an instance can be instantiated from an AMI that contains its own user-managed Xen DomU kernel.

Q: What is EBS?

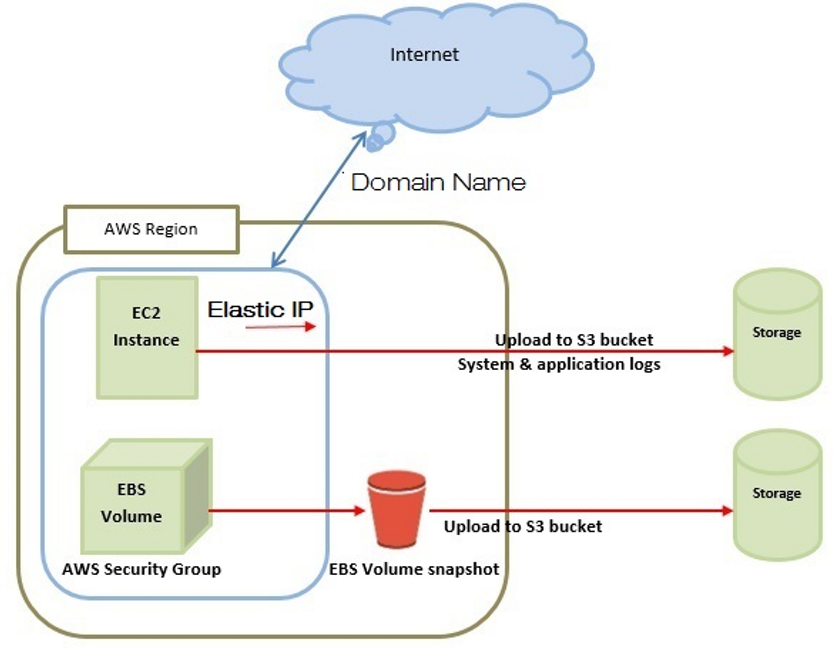

- EBS stands for Elastic Block Store, and is a service that provides dynamically allocatable, persistent block storage volumes that can be attached to EC2 instances.

- Most operations that can be performed on a hard disk can be performed on an EBS volume, e.g. formatting it with a filesystem and mounting it.

- EBS also provides additional SAN-like features such as taking snapshots of volumes, and detaching and reattaching volumes dynamically.

- One notable feature that SAN LUNs support and EBS volumes generally do not is multi-initiator access. (I.e. a given EBS volume can normally be attached to only a single EC2 instance at a time, so shared-storage clustering is not generally supported. EBS Multi-Attach, available only for Provisioned IOPS io1/io2 volumes, is a limited exception.)
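A minimal sketch of the create, attach, format and mount workflow described above. It only assembles the commands as argument lists and prints them; nothing is executed. The volume and instance IDs, zone, size, device name /dev/xvdf and mount point are hypothetical placeholders. On Nitro-based instance types the attached volume usually appears as an NVMe device such as /dev/nvme1n1 instead.

```python
import shlex

# Hypothetical values; substitute your own IDs, zone and device name.
VOLUME_ID = "vol-0123456789abcdef0"
INSTANCE_ID = "i-0123456789abcdef0"
DEVICE = "/dev/xvdf"        # on Nitro instances this is usually /dev/nvme1n1
MOUNT_POINT = "/mnt/data"


def ebs_workflow(size_gib: int, zone: str) -> list[list[str]]:
    """Return, in order, the commands to create, attach, format and mount a volume."""
    return [
        # Run from a management host with the AWS CLI configured.
        ["aws", "ec2", "create-volume",
         "--availability-zone", zone, "--size", str(size_gib), "--volume-type", "gp3"],
        ["aws", "ec2", "attach-volume",
         "--volume-id", VOLUME_ID, "--instance-id", INSTANCE_ID, "--device", DEVICE],
        # Run on the instance itself: the volume is treated like any other disk.
        ["sudo", "mkfs.ext4", DEVICE],
        ["sudo", "mkdir", "-p", MOUNT_POINT],
        ["sudo", "mount", DEVICE, MOUNT_POINT],
    ]


for cmd in ebs_workflow(10, "us-east-1a"):
    print(shlex.join(cmd))
```

The zone is passed to create-volume because a volume can only be attached to an instance in the same availability zone.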

Q: What is the difference between an instance-store AMI/instance and an EBS AMI/instance?

A:

- An instance-store instance boots from an AMI that instantiates a non-persistent root volume, which loses all data on power-off or hardware failure.

- An EBS-backed instance boots from an AMI backed by an EBS snapshot, and its root volume persists when the instance is powered off (stopped) or when the hardware it is running on fails. EBS root volumes can be snapshotted and cloned like other EBS volumes.

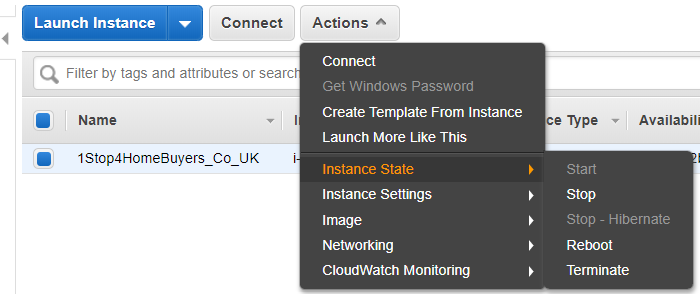

Q: What is the difference between terminating an instance and stopping an instance?

A: Please note this distinction applies only to EBS-backed instances.

- Stopping an instance effectively powers it off virtually, but it remains in the inventory and can be powered on (started) again.

- Terminating an instance removes its record from the system inventory and, by default, also deletes its root volume (this is controlled by the volume’s DeleteOnTermination attribute).
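The difference between stopping and terminating can be sketched as a small state machine. The state names are the ones EC2 reports (pending, running, stopping, stopped, shutting-down, terminated); the event names such as "boot-complete" are hypothetical labels for transitions that EC2 performs on its own. This is an illustrative model, not an AWS API.

```python
from enum import Enum


class State(Enum):
    PENDING = "pending"
    RUNNING = "running"
    STOPPING = "stopping"
    STOPPED = "stopped"
    SHUTTING_DOWN = "shutting-down"
    TERMINATED = "terminated"


# (current state, action) -> next state, for an EBS-backed instance.
# "boot-complete", "halt-complete" and "cleanup-complete" are hypothetical event names.
TRANSITIONS = {
    (State.PENDING, "boot-complete"): State.RUNNING,
    (State.RUNNING, "stop"): State.STOPPING,
    (State.STOPPING, "halt-complete"): State.STOPPED,
    (State.STOPPED, "start"): State.PENDING,
    (State.RUNNING, "terminate"): State.SHUTTING_DOWN,
    (State.STOPPED, "terminate"): State.SHUTTING_DOWN,
    (State.SHUTTING_DOWN, "cleanup-complete"): State.TERMINATED,
}


def step(state: State, action: str, ebs_backed: bool = True) -> State:
    """Apply an action, rejecting transitions EC2 would not allow."""
    if action == "stop" and not ebs_backed:
        raise ValueError("instance-store-backed instances cannot be stopped, only terminated")
    try:
        return TRANSITIONS[(state, action)]
    except KeyError:
        raise ValueError(f"cannot {action!r} an instance in state {state.value!r}") from None
```

A stopped instance can go back to pending, while terminated has no outgoing transitions: once an instance is terminated it cannot be started again.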

Q: How does IP addressing work in EC2?

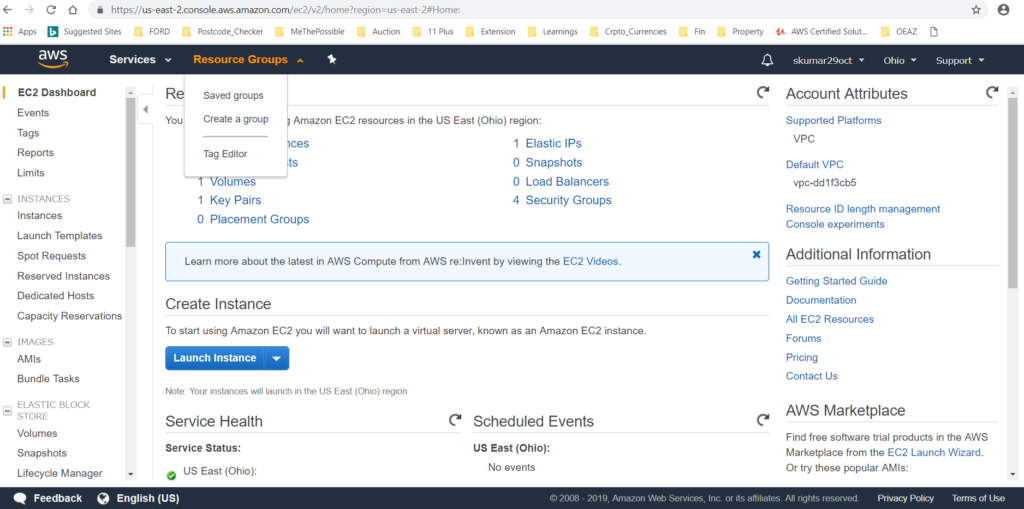

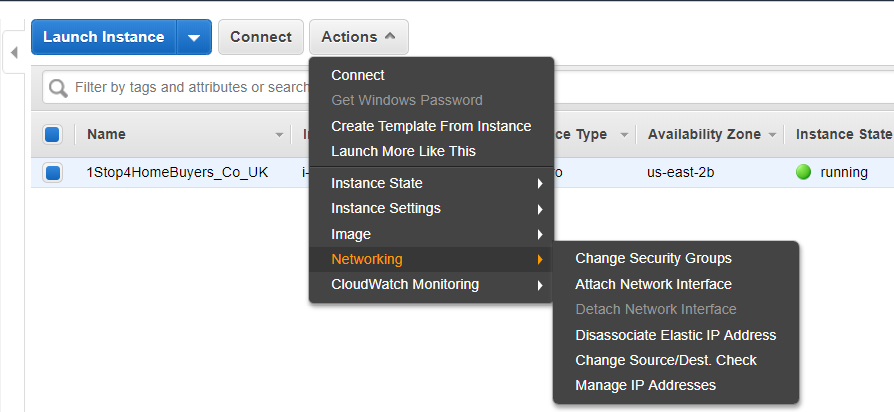

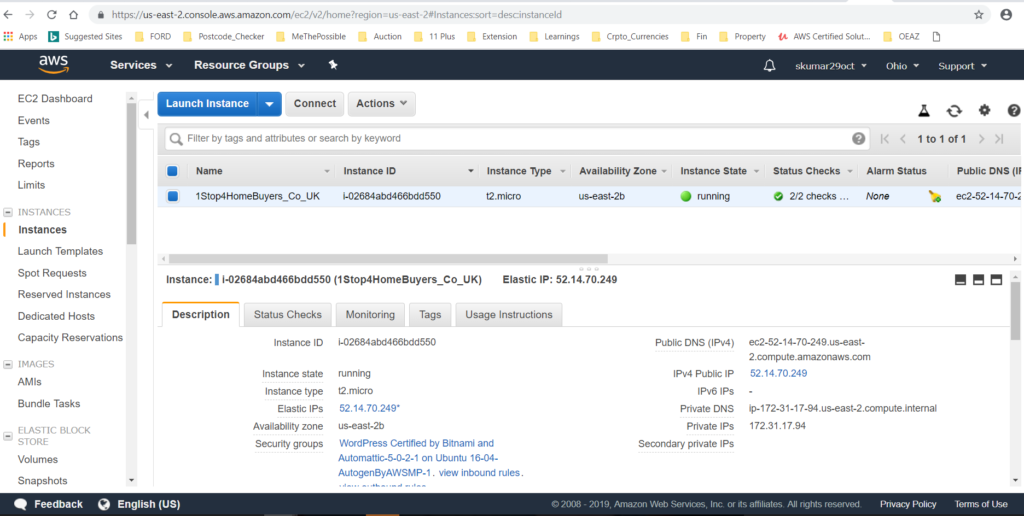

A: Modern EC2 instances typically exist in a “VPC”, or Virtual Private Cloud network. A VPC is a network overlay environment allowing the user to specify various aspects of the network topology including CIDR ranges, subnets, routing tables, and ACLs. Instances are assigned one or more network interfaces in a VPC, each of which can have one or more IP addresses. Publicly routable IPv6 addresses are available. IPv4 addressing is handled using private RFC 1918 addresses and stateless 1:1 NAT for public internet access.
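Choosing a VPC CIDR range and carving it into subnets can be sketched with Python’s standard ipaddress module. This sketch assumes the VPC uses an RFC 1918 range, as described above, and enforces the IPv4 VPC size limits of /16 to /28. AWS reserves the first four addresses and the last address of every subnet, so each subnet has five fewer usable addresses than its raw size. The function name plan_subnets is hypothetical.

```python
import ipaddress
from itertools import islice

# The three private ranges defined by RFC 1918.
RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

# AWS reserves the first four addresses and the last address of every subnet.
RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int) -> list[tuple[str, int]]:
    """Split a VPC range into `count` subnets; return (cidr, usable address count) pairs."""
    vpc = ipaddress.ip_network(vpc_cidr)
    if not any(vpc.subnet_of(block) for block in RFC1918):
        raise ValueError(f"{vpc} is not inside an RFC 1918 range")
    if not 16 <= vpc.prefixlen <= 28:
        raise ValueError("IPv4 VPC ranges must be between /16 and /28")
    # islice avoids materialising every possible subnet of a large range.
    subnets = list(islice(vpc.subnets(new_prefix=new_prefix), count))
    if len(subnets) < count:
        raise ValueError(f"{vpc} only holds {len(subnets)} /{new_prefix} subnets")
    return [(str(s), s.num_addresses - RESERVED_PER_SUBNET) for s in subnets]


print(plan_subnets("10.0.0.0/16", 24, 3))
# [('10.0.0.0/24', 251), ('10.0.1.0/24', 251), ('10.0.2.0/24', 251)]
```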

A legacy “classic” networking mode (EC2-Classic) existed, in which each instance was given a randomly assigned private IP address that mapped via NAT to a randomly assigned public IP address. Amazon stopped provisioning this mode for new accounts and has since retired it. VPC instances allow more control over private (and public) IP address mappings and assignment: one can assign custom private IP ranges and addresses, and can also choose not to map a public IP address at all.

Q: What is an Elastic IP Address (EIP)?

A: An Elastic IP address is a public IP address that is allocated to an individual AWS account. Elastic IPs are allocated per region: an address can be associated with any EC2 instance within its region, where it replaces the instance’s regularly assigned, randomly chosen public IP address.

Q: What is an EC2 Region?

A: An EC2 Region is a geographic area containing a completely autonomous set of compute resources with its own management infrastructure. Regions do not share any resources, so they are considered completely separate for disaster recovery purposes.

Q: What EC2 Regions are there?

A: The official list of regions grows with some regularity. In general, the latest Debian AMIs are available in all public regions. There is also the non-public GovCloud region, available only to US Government agencies. At present, Debian AMIs are not published in GovCloud, but users have requested them.

Q: What is an EC2 Availability Zone?

A: An availability zone is a separate “failure zone” within a given region in which resources can be instantiated. Each availability zone has its own power and its own physical set of hardware and resources, while the availability zones within a given region share a management interface/infrastructure.

Q: What is an EC2 Security Group?

A: A Security Group (SG) is a management construct within EC2 that acts similarly to a stateful, network-based firewall. An instance must be assigned one or more security groups when it is first instantiated; for VPC instances the assigned groups can be changed afterwards (in legacy EC2-Classic, membership was fixed at launch). Security groups contain only allow rules: inbound rules admit specific TCP/UDP/ICMP traffic based on the source security group ID, network address range or IP address, and all other inbound traffic is dropped. VPC security groups also have outbound rules, which by default allow all outbound traffic. Traffic between instances in the same security group is allowed only if a rule references the group itself as a source, as the default security group does.
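How inbound rules are evaluated can be sketched in a few lines: a packet is admitted if any allow rule matches its protocol, port and source, and dropped otherwise. The InboundRule class and inbound_allowed function are hypothetical names for illustration, and the sketch covers only TCP/UDP port matching. It does not model statefulness; in real security groups, reply traffic for an allowed connection is permitted automatically.

```python
import ipaddress
from collections.abc import Collection, Iterable
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InboundRule:
    """One allow rule; security groups have no deny rules."""
    protocol: str                        # "tcp" or "udp" in this sketch
    port_from: int
    port_to: int
    cidr: Optional[str] = None           # source range, e.g. "203.0.113.0/24"
    source_group: Optional[str] = None   # source security group ID, e.g. "sg-..."


def inbound_allowed(rules: Iterable[InboundRule], protocol: str, port: int,
                    src_ip: str, src_groups: Collection[str] = ()) -> bool:
    """Return True if any rule admits the packet; everything else is dropped."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if rule.protocol != protocol or not rule.port_from <= port <= rule.port_to:
            continue
        if rule.cidr is not None and addr in ipaddress.ip_network(rule.cidr):
            return True
        if rule.source_group is not None and rule.source_group in src_groups:
            return True
    return False
```

For example, a rule allowing TCP port 22 from 203.0.113.0/24 admits SSH from 203.0.113.7, but not from 198.51.100.1, because there is no matching allow rule.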

Q: What is instance metadata?

A: Instance metadata is descriptive information about a particular instance (e.g. its public IP address or availability zone) that is available via an HTTP request made from that instance, and from that instance alone. Userdata is one of the pieces of data available.
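A minimal sketch of querying the metadata service from inside an instance using only the standard library. It follows the IMDSv2 session flow: a PUT to /latest/api/token returns a token, which is then sent in the X-aws-ec2-metadata-token header on each GET. The service lives at the link-local address 169.254.169.254. Userdata is served at latest/user-data and other values under latest/meta-data/. The helper names are hypothetical, and fetch only works when run on an EC2 instance.

```python
import urllib.request

# Link-local address of the instance metadata service; reachable only from the instance.
IMDS = "http://169.254.169.254/latest"


def token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    """IMDSv2 step 1: request a session token (21600 s is the maximum TTL)."""
    return urllib.request.Request(
        f"{IMDS}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def imds_request(path: str, token: str) -> urllib.request.Request:
    """IMDSv2 step 2: GET a path such as 'meta-data/public-ipv4' or 'user-data'."""
    return urllib.request.Request(
        f"{IMDS}/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch(path: str, timeout: float = 2.0) -> str:
    """Fetch one value; this performs network I/O and only works on an EC2 instance."""
    with urllib.request.urlopen(token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(imds_request(path, token), timeout=timeout) as resp:
        return resp.read().decode()
```

On an instance, fetch("meta-data/placement/availability-zone") would return the zone the instance runs in.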

Q: What is userdata?

A: When one instantiates an EC2 instance, one may optionally pass up to 16 KB of data to the API for use by the instance. (Typical use cases are running scripts and/or configuring the instance for a particular purpose.)
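A short sketch of preparing userdata. The 16 KB limit applies to the raw data before base64 encoding, and the raw EC2 API expects the data base64-encoded. The AWS CLI and SDKs such as boto3 usually perform that encoding for you, so this step mainly matters when calling the API directly. The function name encode_user_data is hypothetical.

```python
import base64

USER_DATA_LIMIT = 16 * 1024  # 16 KB, measured on the raw data before base64 encoding


def encode_user_data(text: str) -> str:
    """Check the size limit and return the base64 form the raw EC2 API expects."""
    raw = text.encode("utf-8")
    if len(raw) > USER_DATA_LIMIT:
        raise ValueError(f"user data is {len(raw)} bytes; the limit is {USER_DATA_LIMIT}")
    return base64.b64encode(raw).decode("ascii")


script = "#!/bin/sh\necho hello > /tmp/hello\n"
print(encode_user_data(script))
```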

Q: What is cloud-init?

A: Cloud-init is a framework written in Python that processes EC2 userdata to configure a newly instantiated EC2 instance. See the upstream project for more details: https://help.ubuntu.com/community/CloudInit
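Cloud-init accepts several userdata formats, including a “#cloud-config” YAML document, a shell script starting with “#!”, and a MIME multipart message that combines several parts, each identified by a content type such as text/cloud-config or text/x-shellscript. A minimal sketch of building such a multipart document with Python’s email package follows. The nginx package and the motd script are purely illustrative, and build_user_data is a hypothetical helper name.

```python
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText

CLOUD_CONFIG = """\
#cloud-config
package_update: true
packages:
  - nginx
"""

SHELL_SCRIPT = """\
#!/bin/sh
echo "provisioned by cloud-init" > /etc/motd
"""


def build_user_data(parts: list[tuple[str, str]]) -> MIMEMultipart:
    """Combine (mime_subtype, body) pairs into one multipart/mixed userdata message."""
    message = MIMEMultipart()
    for subtype, body in parts:
        # Produces Content-Type: text/<subtype>, e.g. text/cloud-config.
        message.attach(MIMEText(body, subtype))
    return message


user_data = build_user_data(
    [("cloud-config", CLOUD_CONFIG), ("x-shellscript", SHELL_SCRIPT)]
).as_string()
```

The resulting user_data string can then be passed as the instance’s userdata, subject to the 16 KB limit.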

Q: How do I log into a Debian EC2 instance for the first time?

A: When one instantiates an instance from an official Debian AMI, one needs to assign a previously uploaded or created SSH public key, which will be added to the “admin” user’s authorized_keys. One can then ssh in as “admin” and use sudo to add additional users.
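The first-login steps can be sketched as the commands involved, built as argument lists that could be passed to subprocess.run. The key file path, host address and new user name are hypothetical. The “admin” user comes from the answer above, and adduser is Debian’s standard tool for creating accounts.

```python
import shlex

DEFAULT_USER = "admin"  # official Debian AMIs install the key for this user


def ssh_command(host: str, key_file: str, user: str = DEFAULT_USER) -> list[str]:
    """Build the ssh invocation for the first login to a Debian instance."""
    return ["ssh", "-i", key_file, f"{user}@{host}"]


def add_user_command(new_user: str) -> list[str]:
    """Once logged in as admin, create an additional account via sudo."""
    return ["sudo", "adduser", new_user]


# Hypothetical key path and documentation-range address.
print(shlex.join(ssh_command("203.0.113.10", "~/.ssh/my-ec2-key.pem")))
print(shlex.join(add_user_command("alice")))
```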

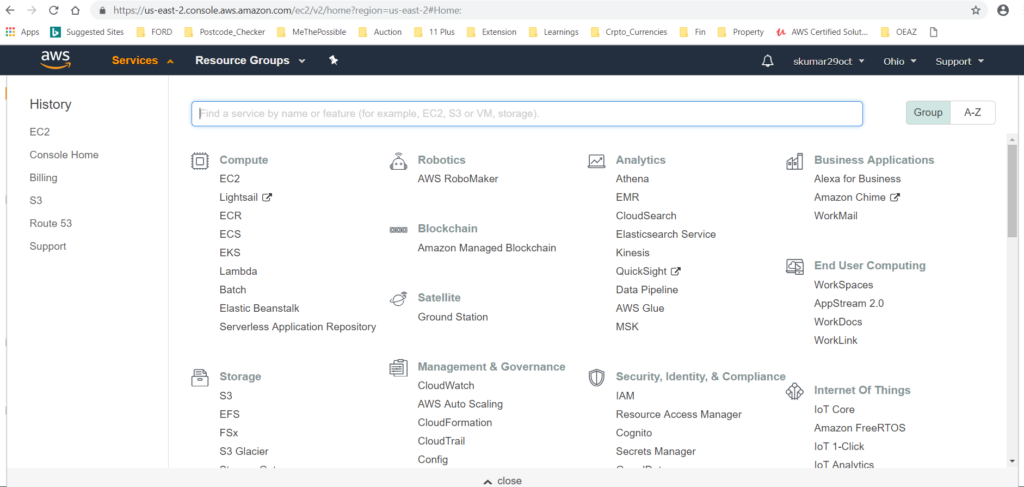

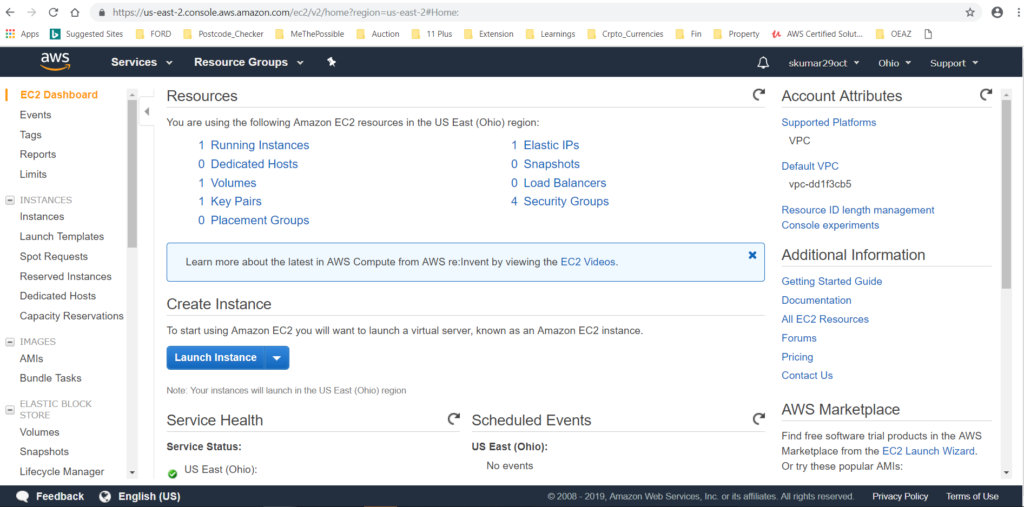

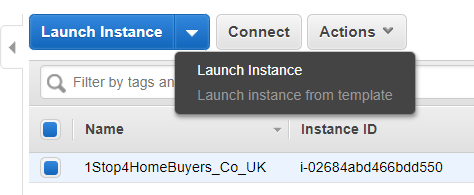

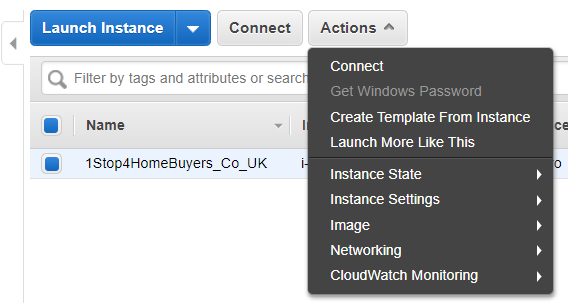

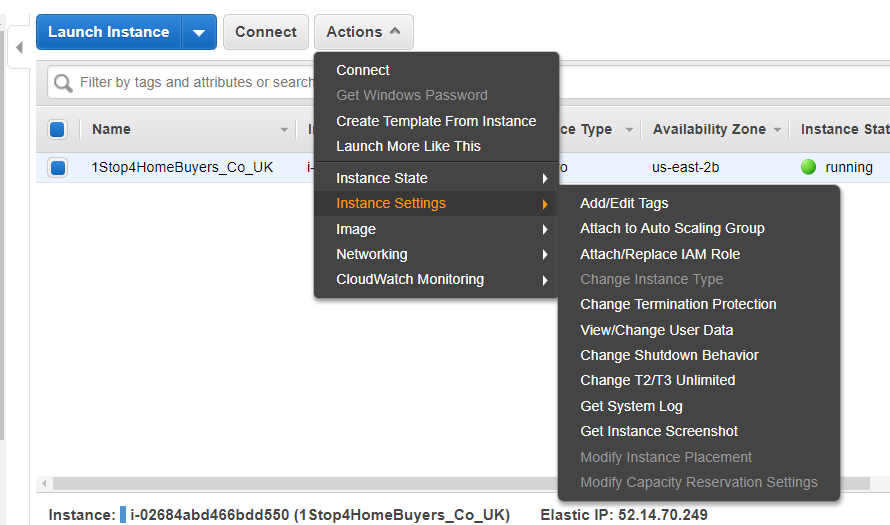

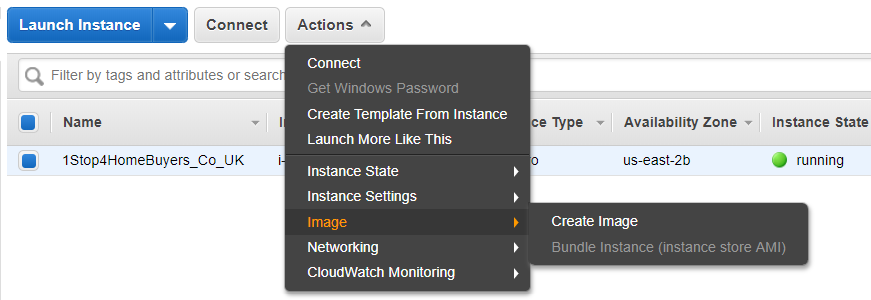

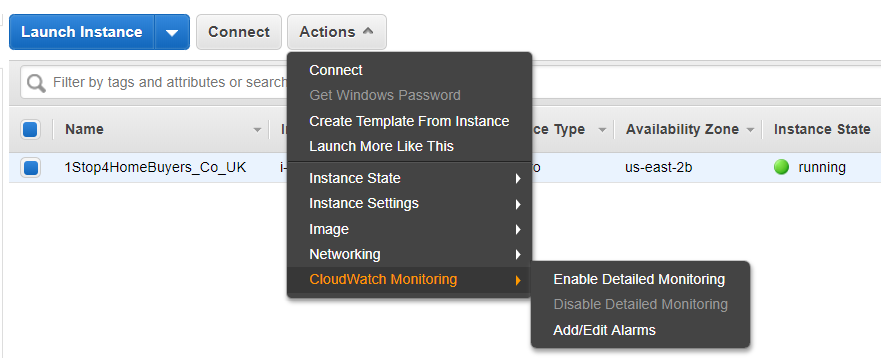

Q: What are the different methods supported to manage EC2?

A: Either via the AWS Web Console, via the API, or via CLI tools.

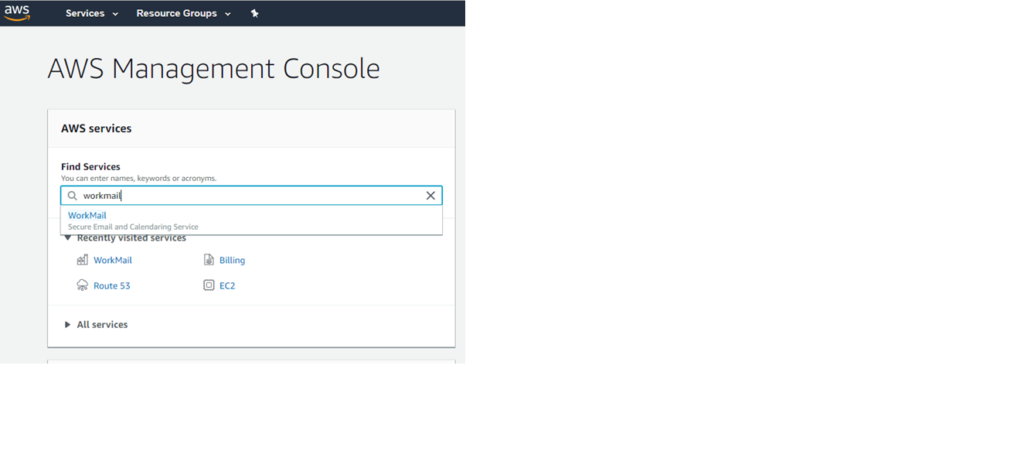

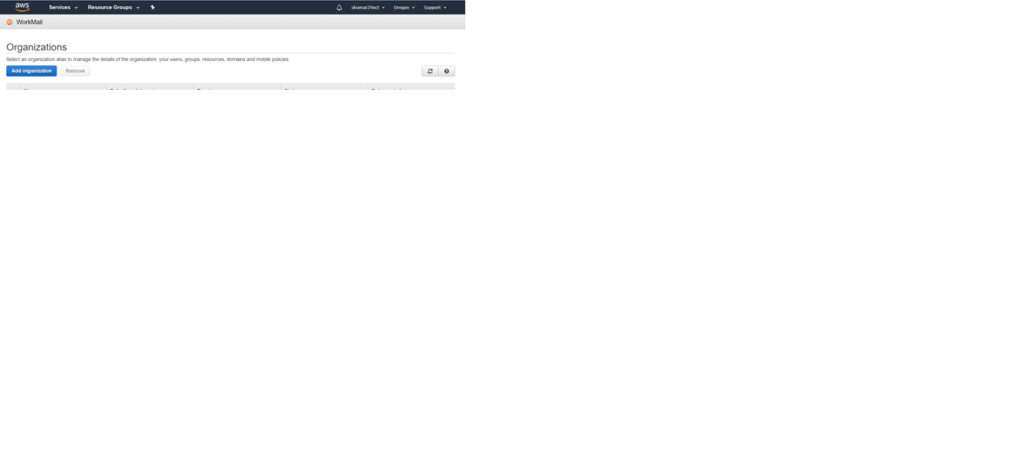

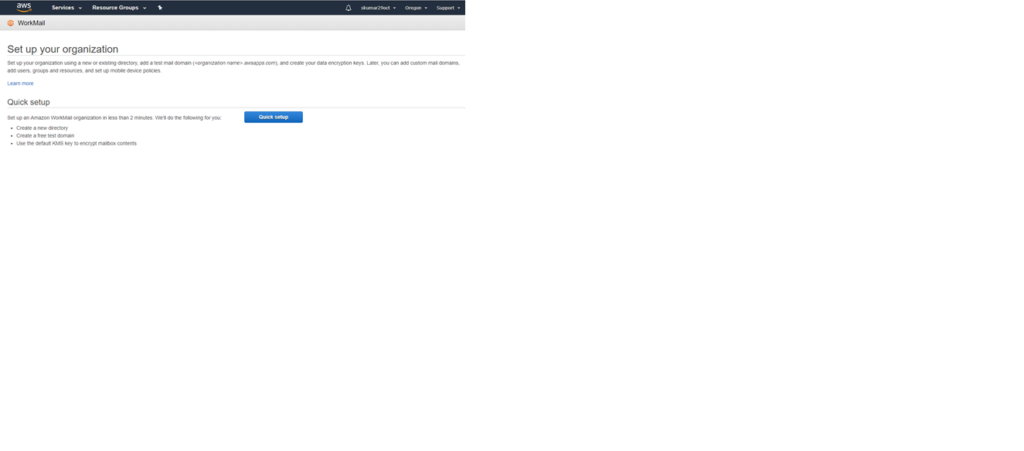

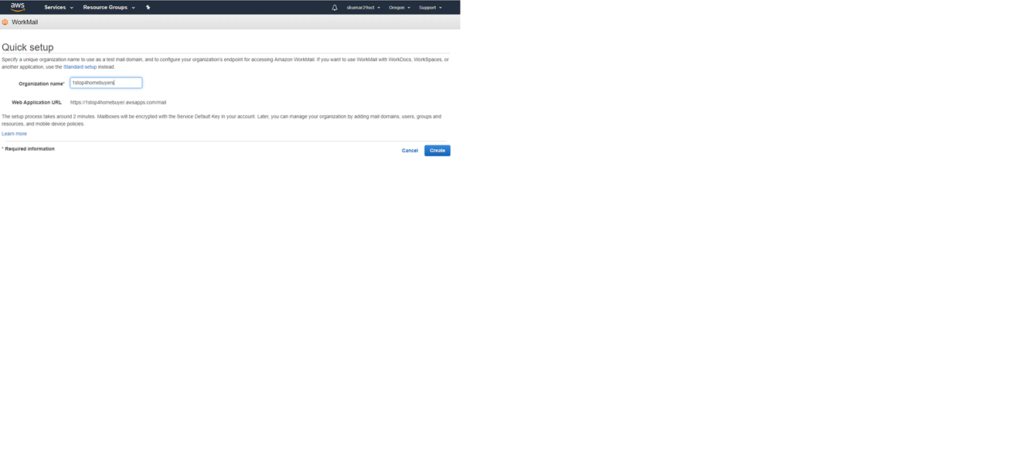

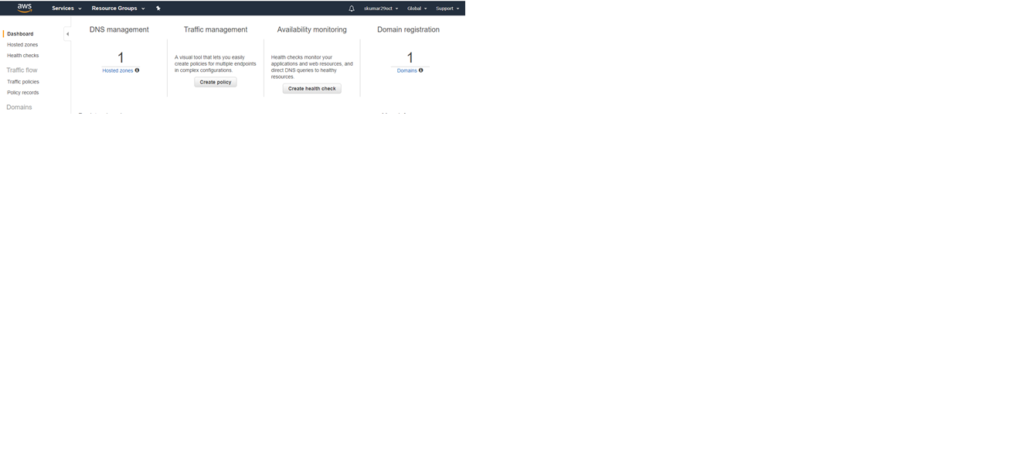

Q: How do I get to the AWS Web Console?

A: https://console.aws.amazon.com/

Q: Where is the EC2 API documented?

A: http://docs.amazonwebservices.com/AWSEC2/latest/APIReference/Welcome.html

Q: What CLI tools are available?

A: The AWS Command Line Interface, available under the DFSG-compliant Apache 2 license, can be installed via apt install awscli on Jessie systems and above. Historical note: the original Amazon EC2 API Tools were not DFSG-compliant, but Debian (still) distributes an alternate set of DFSG-compliant tools, designed to be fully compatible, called euca2ools.
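As an example of driving EC2 from the AWS CLI, the sketch below assembles an aws ec2 run-instances invocation as an argument list, which could then be executed with subprocess.run(cmd, check=True) on a host with configured credentials. The AMI ID, instance type, key name, security group, subnet IDs and userdata file name are hypothetical placeholders.

```python
import shlex
from typing import Optional, Sequence


def run_instances_command(image_id: str, instance_type: str, key_name: str,
                          security_group_ids: Sequence[str],
                          subnet_id: Optional[str] = None,
                          user_data_file: Optional[str] = None) -> list[str]:
    """Build an `aws ec2 run-instances` command line; nothing is executed here."""
    cmd = ["aws", "ec2", "run-instances",
           "--image-id", image_id,
           "--instance-type", instance_type,
           "--key-name", key_name,
           "--security-group-ids", *security_group_ids]
    if subnet_id:
        cmd += ["--subnet-id", subnet_id]
    if user_data_file:
        # The CLI reads the file and handles the base64 encoding itself.
        cmd += ["--user-data", f"file://{user_data_file}"]
    return cmd


print(shlex.join(run_instances_command(
    "ami-0123456789abcdef0", "t3.micro", "my-key", ["sg-0123456789abcdef0"],
    subnet_id="subnet-0123456789abcdef0", user_data_file="user-data.txt")))
```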

Q: Where can I find the list of Official Debian AMIs?

A: The following page lists official and unofficial Debian AMIs: Cloud/AmazonEC2Image. See also 694035 for work in progress on a machine-readable list.

Q: How can I build my own AMI?

A: Stretch (and later) AMIs are built with the FAI (Fully Automatic Installation) tool using the debian-cloud-images configuration. An introduction to creating customized AMIs based on the FAI configuration can be found on Noah’s blog.

Packer is another popular tool for creating AMIs. It can integrate with existing configuration management systems such as Chef and Puppet, and can be used to create images based on customizations performed on a running instance.

Anders Ingemann created a build script for bootstrapping instances, which was used to create the official AMIs for Jessie and earlier. The script can be fully automated, as it needs no user interaction, and custom scripts can be attached to the process as well. You can download or clone the script from GitHub. Any bugs or suggestions should be reported via the GitHub issue tracker. The script is packaged and will be available for install starting with Debian Wheezy.

Also refer to Amazon’s documentation on this topic.