Different options for expdp/impdp.

As you know expdp is a logical backup not the physical one. It works in same version also you can expdp/impdp from lower to higher version. Oracle is very restricted expdp/impdp from higher to lower version.

There are few important things you should keep in mind.

1. Create directory, if already not, and ensure relevant user got grant to read, write the directory. This directory will be used to store the dump and log file.

CREATE OR REPLACE DIRECTORY DIR_NAME AS ‘/ora01/db/oracle/’;

GRANT READ, WRITE ON DIRECTORY DIR_NAME TO scott;

2. Whenever you need to export online database/schema ensure you will use CONSISTENT=Y (in 11.2). If need you can use SCN or time using option FLASHBACK_TIME.

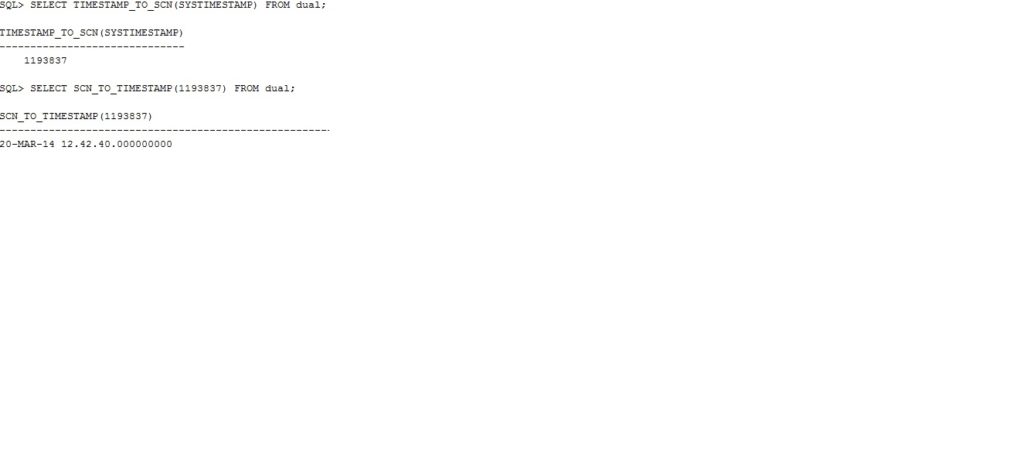

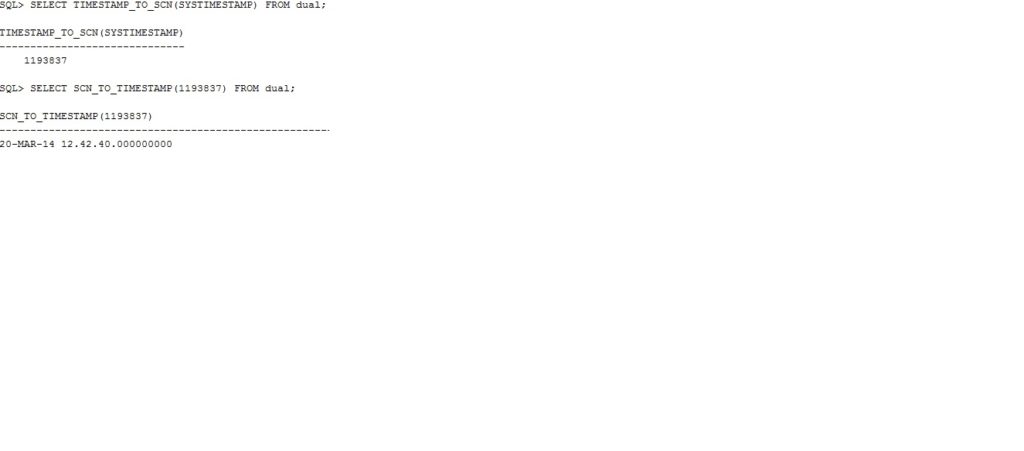

Check SCN/TIMESTAMP:

OR use below syntax. It will ensure your expdp will be consistent.

Note: It has been used many time successfully.

expdp \"/ as sysdba\" FLASHBACK_TIME=\"TO_TIMESTAMP\(TO_CHAR\(SYSDATE,\'YYYY-MM-DD HH24:MI:SS\'\),\'YYYY-MM-DD HH24:MI:SS\'\)\"

1. Estimate the dump size without real export.

expdp \'/ as sysdba\' directory=DATAPUMP_DIR full=y ESTIMATE_ONLY=y

2. Export/Import without password.

Note: With Flashback option.

expdp \"/ as sysdba\" FLASHBACK_TIME=\"TO_TIMESTAMP\(TO_CHAR\(SYSDATE,\'YYYY-MM-DD HH24:MI:SS\'\),\'YYYY-MM-DD HH24:MI:SS\'\)\" directory=DIR_NAME full=y dumpfile=full_DB.dmp logfile=full_db.log compression=all

Note: Without flashback option

expdp \'/ as sysdba\' directory=DATAPUMP_DIR full=y

3. Database full export and import.

expdp user/pwd DIRECTORY=DIR_NAME DUMPFILE=full_db.dmp logfile=full_db.log FULL=y

4. Schema/s export and import.

expdp user/pwd DIRECTORY=DIR_NAME DUMPFILE=full_db.dmp logfile=full_db.log schemas=SCHEMA1,SCHEMA2

5. Check the progress of export/import.

column owner_name format a10

select * from dba_datapump_jobs;

Sample Output:

set linesize 320

column username format a10

select t.username,

t.START_TIME, t.LAST_UPDATE_TIME 'YYYY-MM-DD HH:MM:SS', t.TIME_REMAINING,t.ELAPSED_SECONDS,

t.opname,t.sofar,t.totalwork from V$SESSION_LONGOPS t where/* t.USERNAME = 'user_name' and*/ t.TARGET_DESC = 'EXPORT' ;

Sample Output:

6. Transportable tablespace export and import.

Notes:

1. You must make the tablespace/s read-only before export.

2. You should ‘TRANSPORT_FULL_CHECK=Y’ to ensure tablespaces are self-contained. Means there is no other tablespace holding dependant object/s.

ALTER TABLESPACE tblspace1 READ ONLY ;

ALTER TABLESPACE tblspace2 READ ONLY ;

EXPDP system/password DIRECTORY =DIR_NAME DUMPFILE=trns_tbl.dmp LOGFILE=trns_tbl.log TRANSPORT_TABLESPACES=tblspace1,tblspace2 TRANSPORT_FULL_CHECK=Y

ALTER TABLESPACE tblspace1 READ WRITE ;

ALTER TABLESPACE tblspace2 READ WRITE ;

7. Table export and import.

Export:

expdp scott/tiger tables=EMP,DEPT directory=DIR_NAME dumpfile=EMP_DEPT.dmp logfile=expdpEMP_DEPT.log

Import:

impdp scott/tiger tables=EMP,DEPT directory=DIR_NAME dumpfile=EMP_DEPT.dmp logfile=impdpEMP_DEPT.log

8. Parallel export and import to improve the performance.

Export:

expdp scott/tiger directory=DIR_NAME parallel=4 dumpfile=fulldb_%U.dmp logfile=exfulldb.log full=y

Import:

impdp scott/tiger directory=DIR_NAME parallel=4 dumpfile=fulldb_%U.dmp logfile=imfulldb.log

9. EXCLUDE tables and schemas from a full database export/import.

Notes:

1. You need not prefix the owner name. If you put the OWNER.TABLE_NAME it would not work.

2. It will EXCLUDE all tables mentioned in the list even if more than one owner has the same object name.

For example: If TABLE_NAME1 table is owned by USER1 and USER2 its table will be EXCLUDED from both.

3. Below command will work only in the parameter file, not in the command line.

expdp_exclude_tables_n_users.par

DIRECTORY=DIR_NAME

DUMPFILE=dump_name.dmp

LOGFILE=log_file_name.log

FULL=Y

EXCLUDE=STATISTICS

EXCLUDE=TABLE:"IN ('TABLE_NAME1','TABLE_NAME3','TABLE_NAME3')"

EXCLUDE=SCHEMA:"IN ('SCHEMA1', 'SCHEMA2')"

-- END expdp_exclude_tables_n_users.par

Command line syntax of above par

expdp system/password DIRECTORY=DATA_PUMP_DIR DUMPFILE=abc.dmp FULL=Y

EXCLUDE=TABLE:\"IN \(\'TABLE_NAME1\', \'TABLE_NAME2\' , \'TABLE_NAME3\')\"

EXCLUDE=SCHEMA:\"IN \(\'SCHEMA1\', \'SCHEMA2\'\)\"